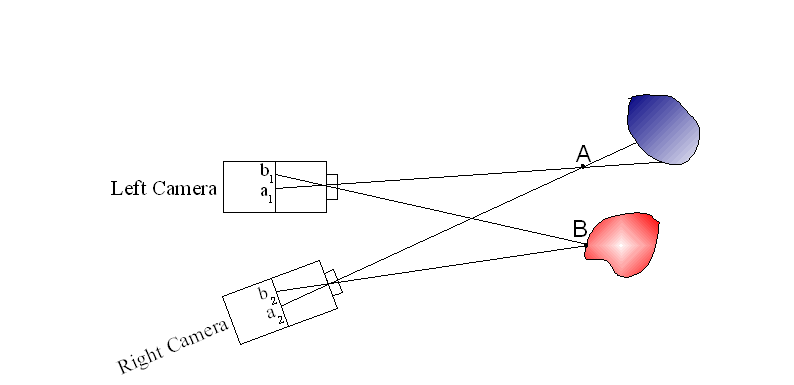

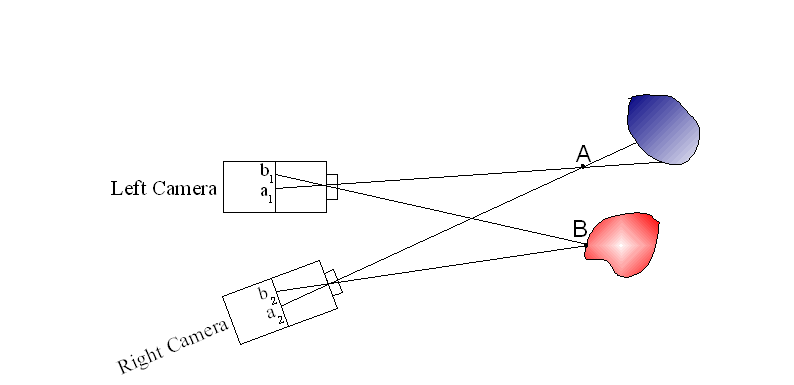

Figure 1: Pixels b1 and b2, projections of fly B, get identical grey levels, while pixels a1 and a2, projections of fly A, which receive their illumination from two different physical points on the object’s surface, get different grey levels.

The Fly Algorithm

In collaboration with Jean Louchet

Artificial vision is a key element in robots autonomy. The Fly algorithm is a fast evolutionary algorithm using pairs of stereo images. It aims to be used in particular in the field of real time obstacle detection and control for mobile robotics and automated vehicles. The Fly algorithm is based on the Parisian evolution who essentially differs from conventional artificial evolution by its semantics. According to the general scheme of Parisian evolution, the problem’s solution is represented by the whole population rather than the best individual alone. The representation of the environment is here split into a large number of simple primitives (the flies), a set of 3-D points which hyopefully gather onto the surfaces of obstacles. These points are evolved following the classical steps of evolutionary algorithms.

Flies and stereovision

The Fly algorithm produces a set of 3-D points which gather on the surfaces of obstacles. A fly is defined as a 3-D point with coordinates (x,y,z). If the fly is on the surface of an opaque object, then the corresponding pixels in the two images will normally have highly similar neighbourhoods (figure 1). Conversely, if the fly is not on the surface of an object, their close neighbourhoods will usually be poorly correlated.

Figure

1: Pixels b1

and b2,

projections of fly B, get identical grey levels, while pixels a1

and a2,

projections of fly A, which receive their illumination from two

different physical points on the object’s surface, get different

grey levels.

The fitness function exploits this property and evaluates the degree of similarity of the pixel neighbourhoods of the projections of the fly, giving higher fitness values to those probably lying on objects surfaces. For instance, from this pair of images:

Figure 2: Pair of images

Figure 3: Flies found on the pair of images of figure 2

Flies and robotics

The Fly algorithm has been first demonstrated during the Paris RFIA Pattern Recognition and Artificial Intelligence conference in 2000, and its application to obstacle avoidance in Robotics with a matching real-time robot trajectory controller in 2002 during the Artificial Evolution Conference (Como, Italy). Its implementation into a real-scale mobile robot was demonstrated during the /CyberCars final project presentation in Antibes (June 7-11, 2004). The public could see the real time detection results on a screen while cameras' images from the scene were being processed.

In order to use the Fly algorithm in the field of assisted driving, we developed a strategy to make the program quantify the probability that an obstacle is in front of the cameras. Figure 1 shows flies gathering on obstacles, and figure 2 shows the same scene as interpreted by the algorithm in terms of obstacle probability. On figure 2, the probability of a collision with an obstacle in a given direction is as high as stains are numerous and dark in that direction. These results were obtained using two commercial CCD cameras and a Pentium 2GHz computer, with a population of 5000 flies and after 30 generations. One generation takes about 20 milliseconds.

A collaboration with the IMARA/LARA project (until March 2008), aimed at integrating control strategies based on the fly algorithm into a vehicle.

Figure 1. A pedestrian, 4 metres in front of the cameras

Figure 2. Probability of presence of near obstacles

SLAM

On a real-world robot, odometric data given by wheel rotation coders give an estimation of the robot's position. In most applications, wheel rotation is under control of the trajectory planner. The actual robot trajectory does not exactly match the target trajectory due to “trajectory noise” caused by factors such as wheel slipping, tyre wear or uneven ground surface. SLAM (Simultaneous Localisation And Mapping) allows the robot to use its vision capabilities to extract odometry information, progressively build a map of its environment and localise itself within this map. One of the main problems is how to deal with noise and errors on the positions of objects. We show a way the fly representation may be used by a mobile robot for its own localisation and build a map of its environment (SLAM). From two succesive pairs of images, we try to detezrmine the movements of the cameras. The flies are searched in the first pair of images as shown here:

First

pair of images.

The movement between the two positions is searched

for projecting the flies of this pair to the next pair as shown here:

Second

pair of images.

Medical applications of the Fly Algorithm

In collaboration with Jean Louchet and Franck Vidal.

Fly4PET: Fly Algorithm in PET Reconstruction for Radiotherapy Treatment Planning